
Estimated reading time: 8 minutes

Welcome to the second part of my series on statistical modeling. If you missed the first part, you can read it here.

This week we’ll focus on improving our model using personal observations and expert feedback. I got valuable input from smart people like PFF data scientist Timo Riske and NFL analytics pioneer Josh Hermsmeyer.

Key feedback included:

• A solution to the “predicting next week” problem that goes beyond typical regression: For example, if a player sees 25 targets in week n, we can expect fewer targets in week n+1 purely because of regression. The challenge is to ensure that our model accounts for this in a meaningful way.

• Incorporating target depth into the model: This involves considering the depth of both predicted and actual targets.

Personal adjustments I plan to make include:

• Focusing on target share relative to team pass attempts rather than raw targets. This adjustment should help account for differences in game script and pass volume, such as when a team like the Falcons throws the ball 58 times in a game.
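To make the adjustment concrete, here is a minimal sketch of target share measured against team pass attempts. The function name and the example numbers (other than the 58-attempt game mentioned above) are hypothetical and only illustrate the arithmetic.

```python
def target_share(player_targets: int, team_pass_attempts: int) -> float:
    """Share of a team's pass attempts that went to one player."""
    if team_pass_attempts <= 0:
        raise ValueError("team_pass_attempts must be positive")
    return player_targets / team_pass_attempts


# Hypothetical example: the same 10 raw targets in two different game scripts.
heavy_volume = target_share(10, 58)    # pass-heavy game
typical_volume = target_share(10, 33)  # assumed, more ordinary pass volume

print(f"58 attempts: {heavy_volume:.3f}")
print(f"33 attempts: {typical_volume:.3f}")
```

The same raw target count turns into a much smaller share in the pass-heavy game, which is the distortion the share-based view is meant to remove.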

Before diving into these changes, it’s worth noting:

• If you rely on metrics such as target share or air yards share in your analysis, what I built using route-level PFF data is superior on all fronts and should get your attention.

Proving that our model is not just typical regression

For those who may not be familiar, the term “regression” in this context simply refers to the tendency of a player’s performance to revert to its average level.

This can work in either direction, positive or negative. For example, if player X typically averages a target share of 0.30 each week but drops to 0.10 in week n, we would expect him to return to his average of 0.30 in week n+1, assuming there is no significant change in his role or situation. He may even see a small jump above 0.30 in that particular n+1 week.
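A small simulation can illustrate the idea. This is only a sketch under stated assumptions: a player with a fixed true target share of 0.30, a hypothetical 35 team pass attempts every week, and each attempt going to him independently. None of these numbers come from the model itself.

```python
import random


def simulate_weekly_shares(true_share, attempts, n_weeks, seed=0):
    """Observed weekly target share when every attempt is an independent coin flip."""
    rng = random.Random(seed)
    return [
        sum(rng.random() < true_share for _ in range(attempts)) / attempts
        for _ in range(n_weeks)
    ]


shares = simulate_weekly_shares(true_share=0.30, attempts=35, n_weeks=20000)

# Keep only (week n, week n+1) pairs where week n was a "down" week.
pairs = [(cur, nxt) for cur, nxt in zip(shares, shares[1:]) if cur <= 0.20]

mean_down_week = sum(cur for cur, _ in pairs) / len(pairs)
mean_next_week = sum(nxt for _, nxt in pairs) / len(pairs)

print(f"average share in down weeks:     {mean_down_week:.3f}")
print(f"average share the following week: {mean_next_week:.3f}")
```

Because nothing about the simulated player changes, the week after a down week lands back near his true 0.30 on average. Any model that only captures this effect is doing "typical regression" and nothing more.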

One simple way to test our model is to use our forecast target share to predict next week’s target share, and then compare those forecasts to standard target share measures.

If our model is better at predicting future target share, that is a clear sign that our approach adds information beyond simple regression.
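The comparison can be sketched as follows. This is not the code behind the tables in this article: the data layout (dictionaries keyed by player and week), the helper names and the toy numbers are all assumptions made for illustration.

```python
def r_squared(x, y):
    """Squared Pearson correlation, i.e. the R-squared of a one-variable linear fit."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)


def next_week_pairs(predictor, outcome):
    """Pair each player's predictor value in week n with the outcome in week n+1."""
    xs, ys = [], []
    for (player, week), value in predictor.items():
        following = outcome.get((player, week + 1))
        if following is not None:
            xs.append(value)
            ys.append(following)
    return xs, ys


# Made-up weekly shares for three hypothetical players.
actual = {
    ("A", 1): 0.30, ("A", 2): 0.18, ("A", 3): 0.28,
    ("B", 1): 0.20, ("B", 2): 0.25, ("B", 3): 0.17,
    ("C", 1): 0.10, ("C", 2): 0.12, ("C", 3): 0.08,
}
predicted = {
    ("A", 1): 0.27, ("A", 2): 0.25, ("A", 3): 0.26,
    ("B", 1): 0.21, ("B", 2): 0.20, ("B", 3): 0.19,
    ("C", 1): 0.11, ("C", 2): 0.10, ("C", 3): 0.10,
}

x_actual, y_actual = next_week_pairs(actual, actual)
x_pred, y_pred = next_week_pairs(predicted, actual)

r2_actual = r_squared(x_actual, y_actual)
r2_predicted = r_squared(x_pred, y_pred)
print(f"actual share -> next week's actual share:    {r2_actual:.2f}")
print(f"predicted share -> next week's actual share: {r2_predicted:.2f}")
```

Both predictors are scored against the same outcome, next week's actual target share, so the two R-squared values are directly comparable.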

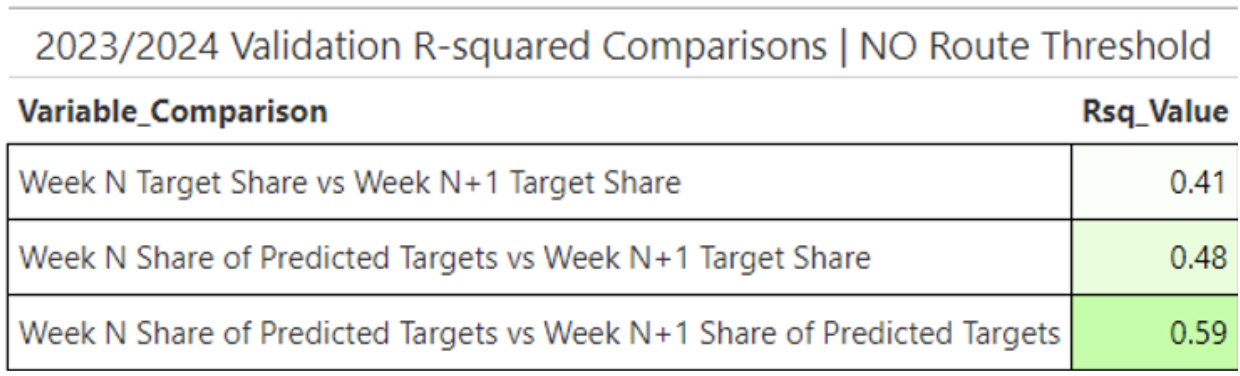

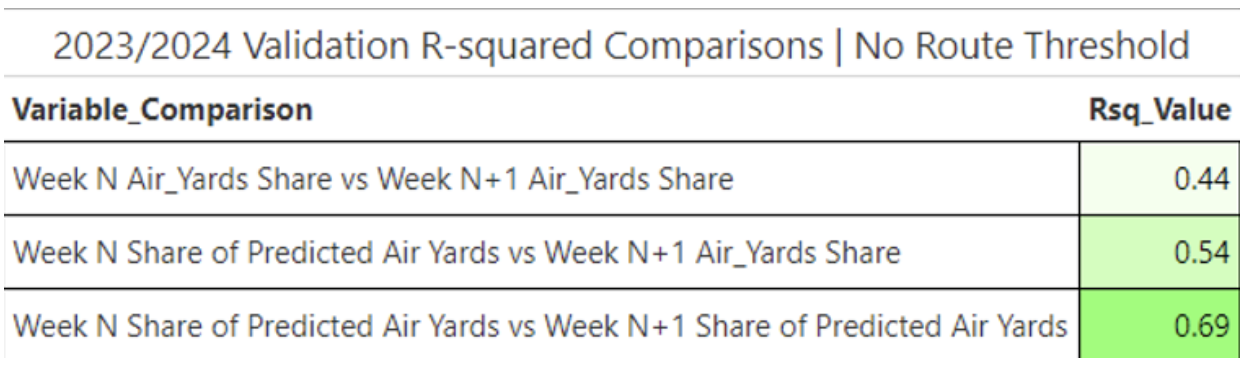

This table provides a lot of valuable information, so let’s break it down:

• Target share explains 41% of the variance in next week's target share. It is worth noting that target share is one of the most consistent indicators of opportunity in football. In the world of sports analytics, few indicators reach an Rsq higher than 0.41.

• Our Proportion of predicted targets metric, (Predicted Targets)/(Sum of Team Predicted Targets), explains an additional 7% of the variance compared to actual target share when forecasting next week's actual target share. This improvement may seem small, but it is a significant step forward in predictive modeling.

• Moreover, Proportion of predicted targets demonstrates much greater stability than target share, with an improvement of 0.18 in Rsq. This means it is more consistent from week to week, offering a clearer picture of future opportunities.
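For readers who want to see the ratio spelled out, here is a minimal sketch. It assumes that a player's predicted targets are the sum of his route-level target probabilities, which is one natural reading of the metric but is not confirmed above. The input layout, the function name and the toy rows are hypothetical.

```python
from collections import defaultdict


def predicted_target_shares(routes):
    """routes: iterable of (team, player, target_probability), one row per route run.

    Returns {(team, player): predicted targets / sum of team predicted targets}.
    """
    player_total = defaultdict(float)
    team_total = defaultdict(float)
    for team, player, prob in routes:
        player_total[(team, player)] += prob
        team_total[team] += prob

    shares = {}
    for (team, player), total in player_total.items():
        if team_total[team] == 0:
            raise ValueError(f"no predicted targets for team {team}")
        shares[(team, player)] = total / team_total[team]
    return shares


# Toy rows: WR1 runs two routes, WR2 runs one.
toy_routes = [
    ("MIN", "WR1", 0.50),
    ("MIN", "WR1", 0.25),
    ("MIN", "WR2", 0.25),
]
toy_shares = predicted_target_shares(toy_routes)
print(toy_shares)
```

Because the denominator is the sum over every receiver on the team, a weak supporting cast shrinks the denominator and raises the share of whoever is earning the high-probability routes.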

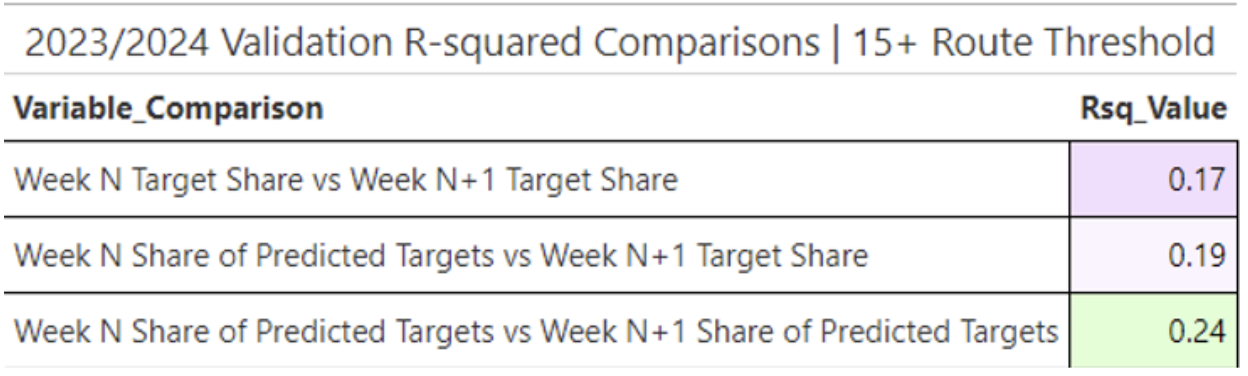

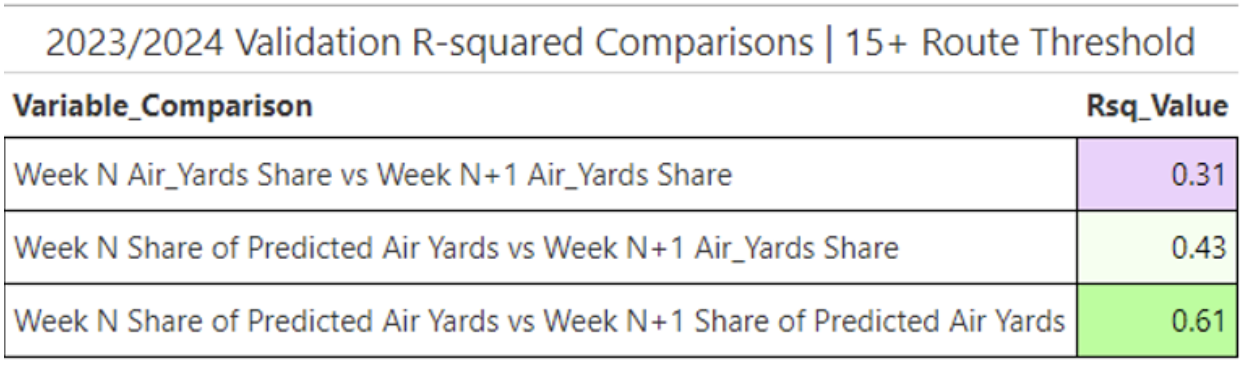

Below is the same analysis restricted to players with more than 15 routes run, which further refines the comparison.

Even after raising the route threshold, we still see improved predictability for week n+1 target share when we use our Proportion of predicted targets variable! This is incredible.

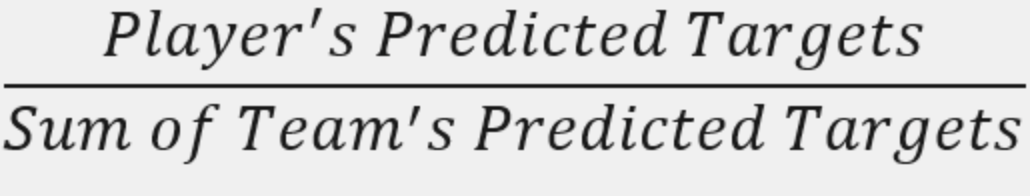

As a reminder, Proportion of predicted targets equals (Predicted Targets)/(Sum of Team Predicted Targets).

Predicted target share beats actual target share in every way!

When I shared the results with Timo, he said: “The more predictive approach sounds natural when you consider that it carries both information about whether the receiver is good or bad and information about whether the other receivers on the team are good or bad, because bad receivers will contribute less to the predicted share.”

Target Depth Adjustment

During a discussion with Timo, he suggested building a similar model focused on targeted routes to develop a Predicted Air Yards metric. This metric helps answer the question: “If the receiver were targeted, how many air yards would he gain?”

Luckily I didn’t have to start from scratch. I reused much of the existing code, changing it to include only targeted routes.

We now have a model of predicted air yards! Since this metric is numeric, we can calculate a test R-squared using 2023 data. Our route-level test R-squared is 0.23, which is strong for a play-by-play model. Typically, R-squared values improve when the data is aggregated to the game or season level.
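The point about aggregation can be shown with a toy example. This is a sketch with made-up numbers, not the 2023 test data, and the helper names are hypothetical. It computes R-squared as 1 - SSE/SST at the route level, then again after summing actual and predicted air yards by game.

```python
from collections import defaultdict


def r2_score(actual, predicted):
    """R-squared of predictions against actuals: 1 - SSE / SST."""
    mean_actual = sum(actual) / len(actual)
    sse = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    sst = sum((a - mean_actual) ** 2 for a in actual)
    return 1 - sse / sst


def sum_by_key(keys, values):
    """Add up values that share the same key (for example, a game id)."""
    totals = defaultdict(float)
    for key, value in zip(keys, values):
        totals[key] += value
    return dict(totals)


# Four targeted routes across two hypothetical games.
game_ids = ["g1", "g1", "g2", "g2"]
actual_air_yards = [5.0, 15.0, 25.0, 35.0]
predicted_air_yards = [10.0, 10.0, 30.0, 30.0]

route_r2 = r2_score(actual_air_yards, predicted_air_yards)

actual_by_game = sum_by_key(game_ids, actual_air_yards)
predicted_by_game = sum_by_key(game_ids, predicted_air_yards)
games = sorted(actual_by_game)
game_r2 = r2_score(
    [actual_by_game[g] for g in games],
    [predicted_by_game[g] for g in games],
)

print(f"route-level R-squared: {route_r2:.2f}")
print(f"game-level R-squared:  {game_r2:.2f}")
```

In this toy data the route-level misses cancel out within each game, so the game-level fit is better than the route-level fit. Real data will not cancel this cleanly, but the same mechanism is why aggregated R-squared values tend to be higher.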

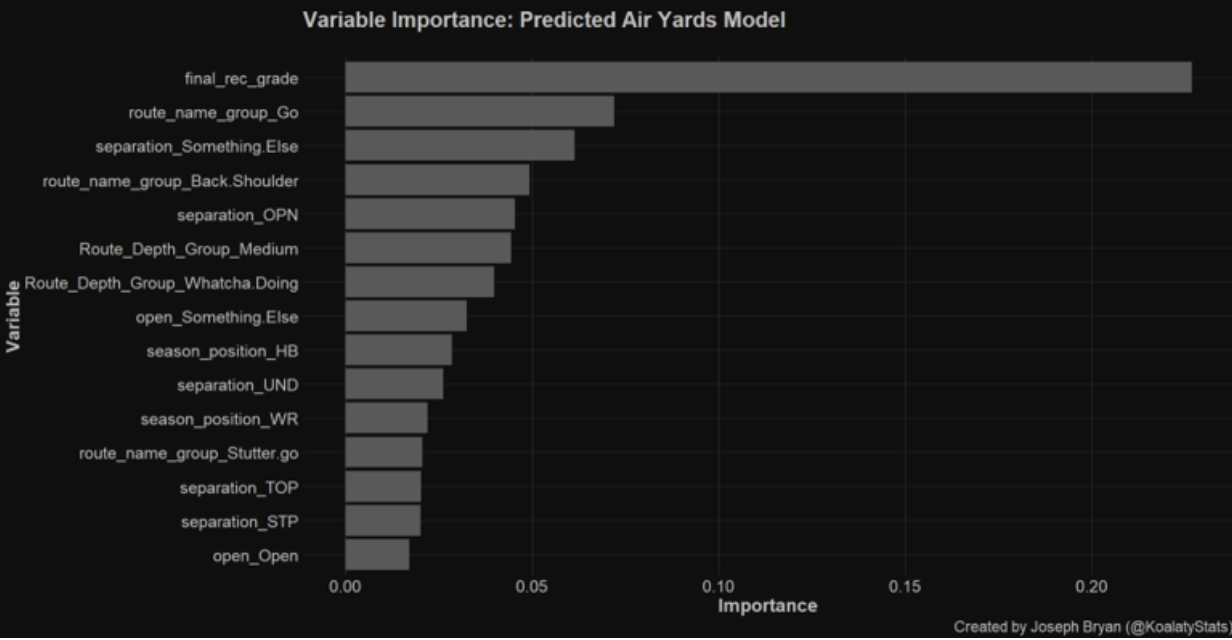

For those interested, here’s a look at variable importance:

• Final_rec_grade turns out to be our most important predictor, which once again tells us that the PFF process is reliable.

• Routes show up at number 2! This makes intuitive sense.

• “Other” for separation is the classification I made for separation that does not fit neatly into “tight,” “single step” or “open.” Most routes that fall into this category are over-the-shoulder routes where there is not much separation.

• Using air yards share, we can predict next week's air yards share at 0.44 Rsq, which is very good and stable. As I said earlier, it doesn’t get much better than that in sports analytics.

• Using Share of Predicted Air Yards, (Predicted Air Yards)/(Sum of Team Predicted Air Yards), we gain significantly more predictive power when forecasting next week's air yards share! We also get greater overall stability, just as we did with Proportion of predicted targets.

• Using our Predicted Air Yards model allows us to increase our predictive power for next week's air yards by 0.1 Rsq. If you aren’t familiar with statistics, let me tell you: this is a remarkable achievement.

These trends generally continue as we increase the route threshold.

The stability metrics for our Proportion of predicted targets and Share of Predicted Air Yards are really impressive. Each metric outperforms its “real world” counterpart in stability, better predicts the future performance of that real-world counterpart, and maintains that stability even as we increase the route threshold.

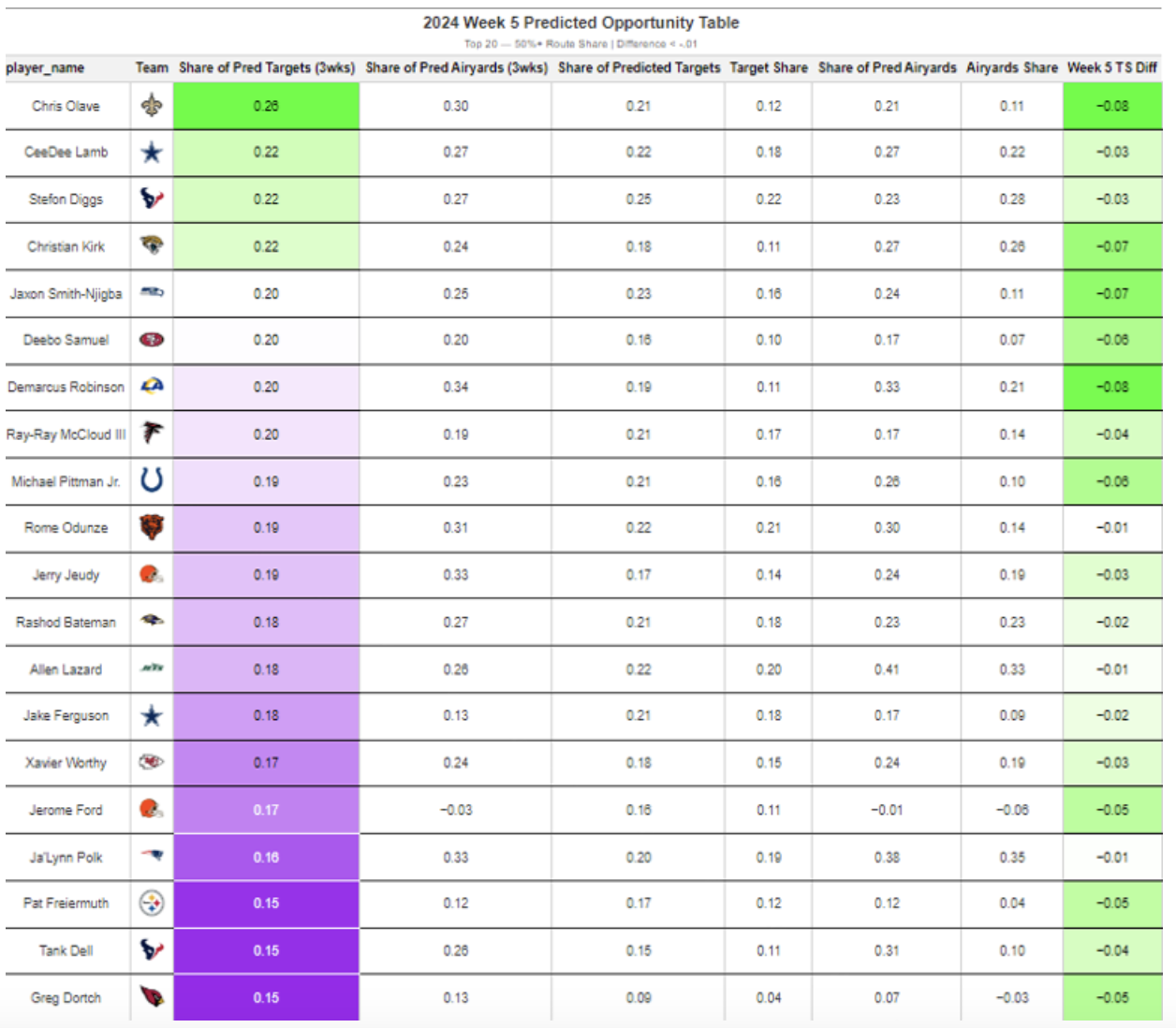

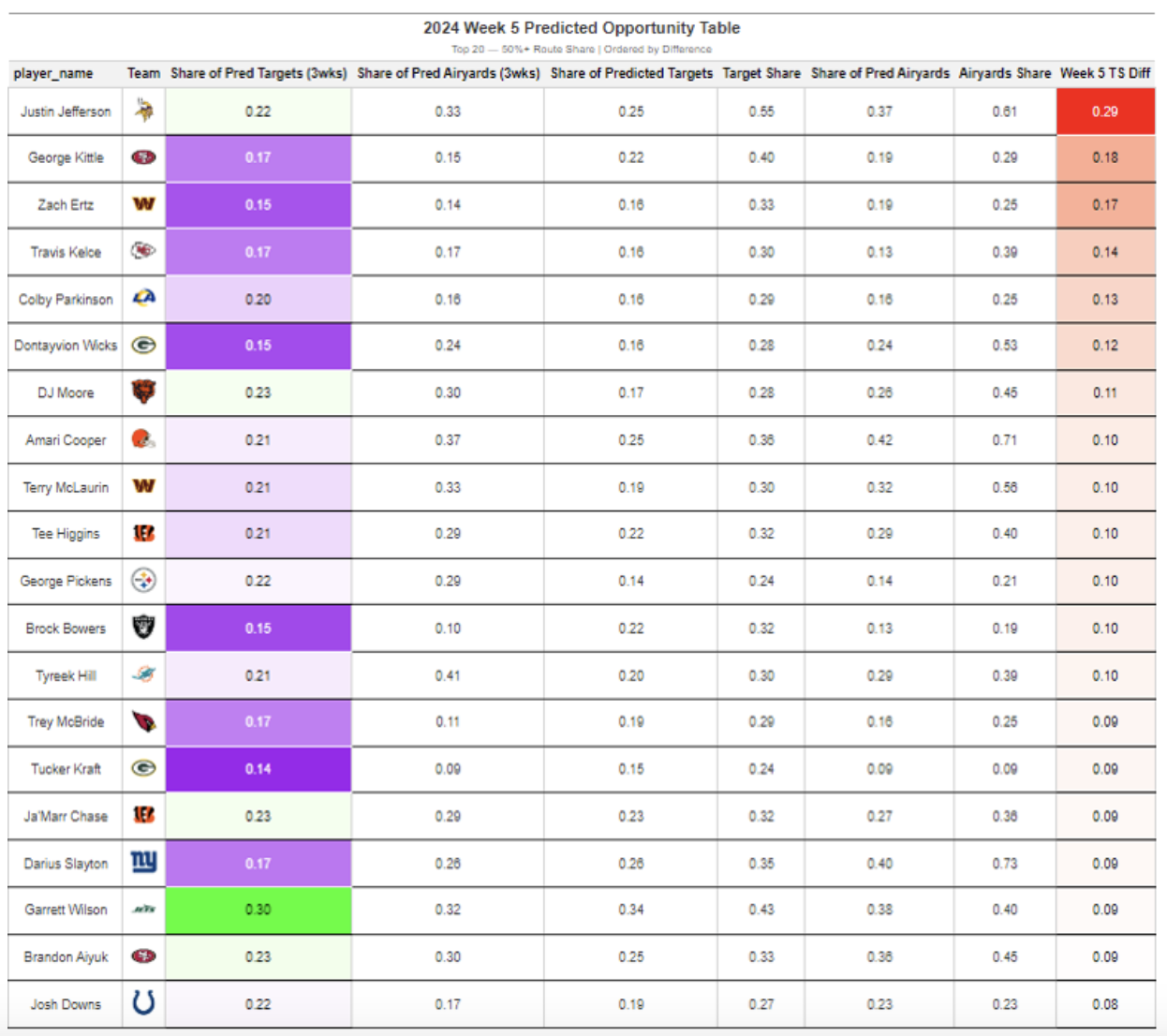

Projected Opportunity Tables for Week 5:

I’ll dig into some fantasy implications of this model in my new article, which uses it along with some interesting regression analyses to forecast breakouts!

Week 5 Model Review

I wanted to explore some examples of the model in action from last week. This gives me the opportunity to reflect on what the model could do better and show you what it sees on a weekly basis.

Editor’s Note: All screenshots taken from NFL+

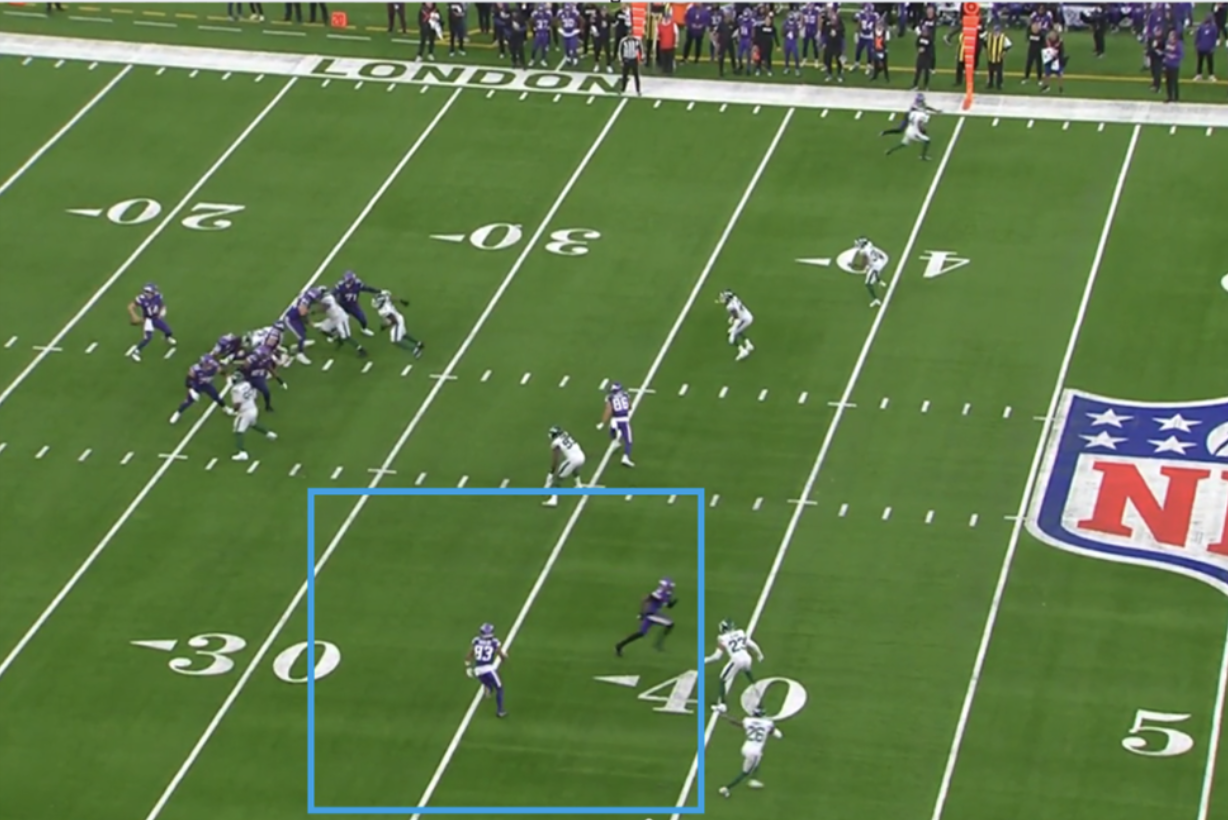

WR Jalen Nailor, Minnesota Vikings: 3.4 predicted targets, 0 actual targets

Nailor was wide open on this play, with my model showing over a 50% chance of him being targeted. However, the pass ultimately went to Justin Jefferson. A pass interference penalty was called, but if it hadn’t been, the Vikings might have regretted missing the opportunity to target Nailor.

Jefferson had a significant 30% difference between his projected target share and actual target share, highlighting how elite players tend to draw targets even when other options are open.

It’s not necessarily a bad decision to target someone like Jefferson, since his talent means he can make a play at any time, but from a purely optimal standpoint, going to Nailor might have been the better choice here.

WR CeeDee Lamb, Dallas Cowboys: 7.7 projected targets, 0 actual targets

This wasn’t a bad decision by Dak Prescott at all. Honestly, I’m not sure how Brevyn Spann-Ford got so wide open for a 13-yard reception, but if CeeDee Lamb had caught the ball instead, it likely would have gone for more than 13 yards.

According to my model, there was a 60% chance that Lamb would be targeted, given the game situation and the surrounding conditions. However, when a receiver is that wide open, it’s hard to give up guaranteed yardage.

WR Rashod Bateman, Baltimore Ravens: 7.8 projected targets, 7 actual targets

Lamar Jackson ended up throwing to Justice Hill, and there was an offensive penalty on the play. I’m not sure if Jackson knew about the penalty, but Bateman was wide open for a layup/comeback opportunity early in the game. There was more than a 40% chance that Bateman would be targeted.

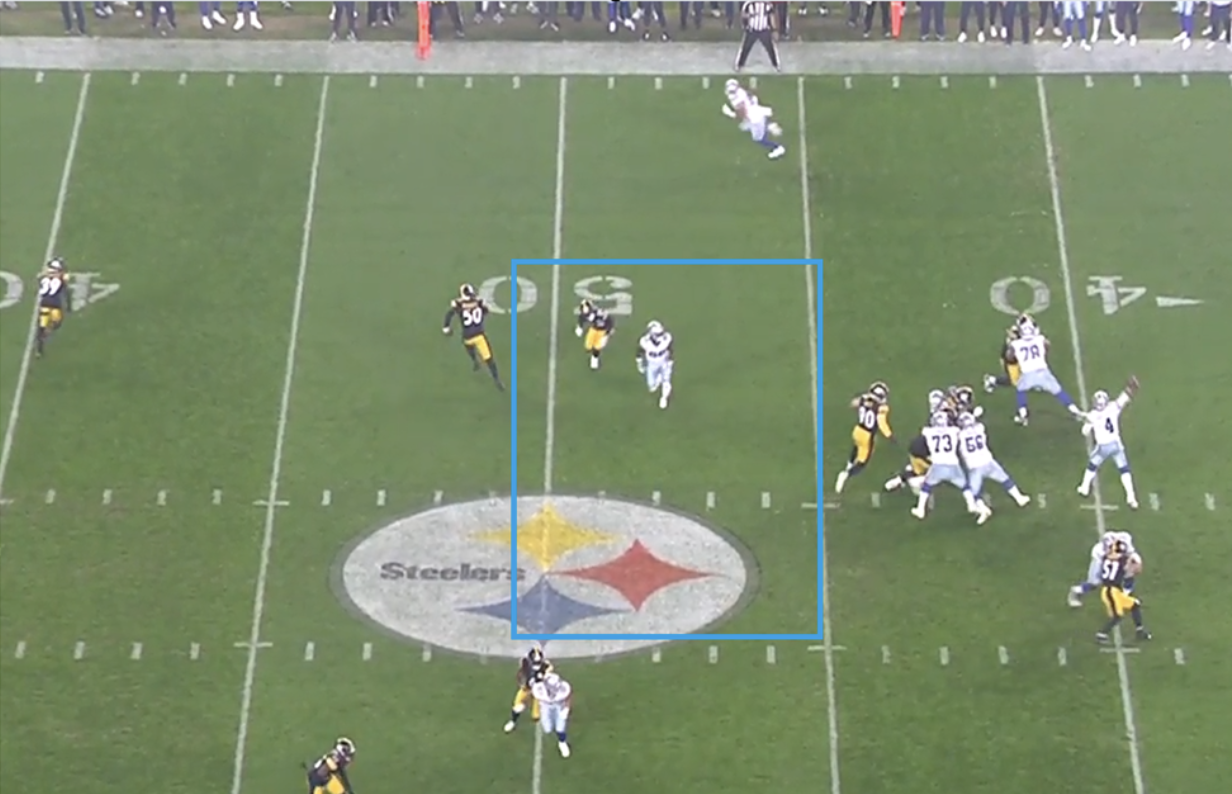

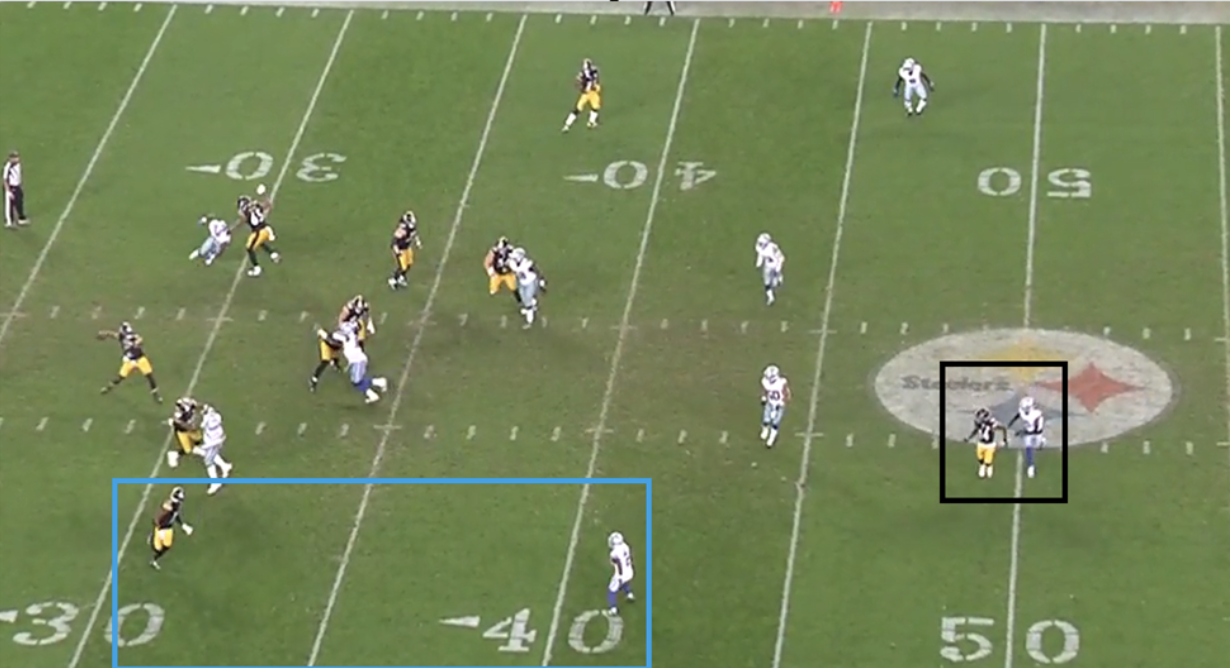

WR George Pickens, Pittsburgh Steelers: 4.0 projected targets, 6 actual targets

Pickens drew some strange targets on Sunday Night Football. He ran 15 routes that qualified for this model, and three of his six actual targeted routes had less than a 25% chance of being targeted.

In the play above, Trevon Diggs was in step with Pickens and it was quite a difficult throw. It was second-and-5, and Van Jefferson was wide open underneath for an easy first down. According to my model, Jefferson had a 39% chance of being targeted and Pickens had a 6% chance.

Thanks for reading my weekly article. I hope you enjoyed it. You can follow me on X/Twitter for more interesting statistical models and forecasts.
